A Modest Software Patch: For Preventing LLMs from Being a Burden to Professors, and for Making Them Beneficial to the Academy

A satirical theory-fiction on the transformation of tutors into Turing cops, marking into an imitation game, and AI-generated homework into the trigger for the technological singularity

For the first time in four years, I taught at a couple of universities this past semester. I was curious to see if there were any major changes—other than there being fewer P2 masks, QR codes, and social distancing rules. Now that the semester is over, it seems to me that the one plainly visible vibe shift is that tutors spend a lot more time with AI on their minds. More specifically, there is an almost incessant suspicion that students are increasingly using ChatGPT, DeepSeek, and other large language models (LLMs) to complete their assignments for them, outsourcing their homework—like their dating life or music taste—to the algorithms.

Take the initial subject orientation meetings: they were no longer simply devoted to running through the course content and devising pedagogical strategies for the classroom. A great deal of time was now taken up going through the shiny new university policies on AI use and abuse. As if determined to match the speed of AI advances, these policies were continually being updated and revised. They were thus open to a certain degree of interpretation and wriggle room. Some subjects sought to work with AI, permitting students to use it for limited tasks like editing their work, but not for directly generating any of the writing itself. Other subjects took a more hardline stance, opting to ban outright any use of AI at all. But even in the latter case, it was clear that at least some students were still relying on AI. A combination of confessions and Turnitin-style AI detectors confirmed it.

The fact that these AI detectors are not always reliable doesn’t make things all that much easier for the tutors. On the contrary, it seems that AI’s overall effect is to have created more work for them than ever before. AI is often touted by Silicon Valley tech bros as offering the potential to make our jobs smoother and more efficient, freeing up our time from the drudgery of labor so that we can presumably do the kinds of things they enjoy: longer lifting sessions, more transcendental meditation retreats, and an ever-widening polycule. At least in the case of higher education, however, it has only piled up stacks of additional paperwork on the tutors’ desks. For tutors are expected to perform the same amount of marking in the same time as before while also familiarizing themselves with how the latest chatbot might answer the assignment and remaining as vigilant for its telltale signs as an AI safety researcher with 99% p(doom).

This means that tutors now spend a sizeable chunk of their time not as tutors but as Turing cops, not so much marking as playing imitation games to determine whether they are dealing with a human or an artificial intelligence. If things keep accelerating down this road, tutors will soon go extinct. They will have been effectively reskilled into blade runners, with their primary role being to administer Voight-Kampff tests to their students. The future of the Academy would then just be one big Replicant Detection Unit or Turing Police Headquarters.

Just for fun, I’d like to put forth one modest—if also indecent—proposal. If more and more students are going to use LLMs to phone in their assignments, why don’t tutors mark them with LLMs too? All this would require is one small tweak. Students would still hand in their AI-generated assignments, as some are already doing. But instead of obsessing like a paranoid protagonist in one of Philip K. Dick’s novels over whether they are interacting with a real human or not, the tutors would simply feed the assignments and the assessment criteria to their own LLMs, prompting them to provide a grade along with some constructive criticism. Could the solution to the problem with which LLMs confront the Academy just be to throw more LLMs at it? If you can’t beat them, join them in becoming biotechnological hybrids or extended minds?

While it might quite rightly seem as if we would be getting pharmakonned by this so-called “solution,” I would like to play the devil’s advocate a little longer—or perhaps just the devil himself in the flesh—and point out that this modest software update to the Academy might actually be enough to bring about the long-heralded technological singularity, if only in an admittedly low-def and rather pixelated form. The technological singularity is supposed to describe what happens when machines one day become more intelligent than humans. At that point, they won’t need to rely on us anymore to make improvements to them. They will be able to improve themselves better than any human programmers can. The improved machines would then be able to improve themselves even better again, with the even more improved machines being able to improve themselves even better still. This process might continue indefinitely in a positive feedback loop of recursive self-improvement, also often referred to as the intelligence explosion.

In its basic structure at least, this intelligence explosion or technological singularity is not so different from my modest patch to the Academy’s code. For you would effectively have a scenario where students submit AI-generated assignments. The tutors would then provide AI-generated feedback. The students input this feedback into their LLMs and submit slightly improved AI-generated assignments next time. The tutors proceed to input the improved assignments into their own LLMs to provide further feedback. Cottagecore’s and Dark Academia’s retvrn to meatspace daydreams aside, perhaps a realistic best-case scenario for the future of late-stage academia might be that it survives in this way as a poor man’s singularity whereby LLMs generate assignments that are marked by other LLMs in a positive feedback circuit of recursive self-improvement, at least of a kind.

The “dark” in Dark Academia—and perhaps in a kind of “Dark Enlightenment” that might emerge from its midst—would then no longer refer to a nostalgic, trad fantasy, but to something closer to the Dark Factory: entirely automated production sites where robots can work in the shadows. The “dark” in all three shadowzones would just mean lights out for humanity as the machines go full galaxy brain mode. Through a simple combination of AI plagiarism and lazy teaching, then, LLMs might yet be redeemed.

Academia always promised to transcend individual human cognition through collective knowledge production, and now it has. The students and we ourselves are now reduced to metadata, our identities mere labels slapped carelessly onto the content flowing in both directions...

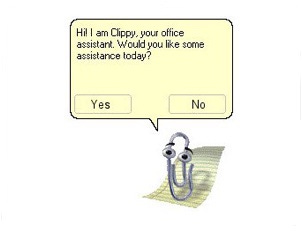

Amazed that you remember Clippy, early evidence of Gates' extreme mothering tendencies... It looks like you're writing a document. Would you like me to f*** around with the formatting for you?